The Tool That Shapes the Hand: Teaching Resilience in the Age of AI

Every educator knows a quiet truth: tools do not simply help us do things; they teach us how to be.

A pencil encourages patience. A calculator shifts how we approach memory. A search engine rewires how we ask questions. Artificial intelligence is no different, except in speed, scale, and intimacy.

To understand AI’s impact on learners, it helps to step back and remember that humans are, by nature, osmotic. We absorb the patterns, incentives, and “vibes” of the systems around us. Over the past twenty years, social media has subtly trained us to write for likes, think in soundbites, and perform for algorithms. Many of those changes, such as attention fragmentation, comparison culture, and performative identity, are now woven into daily life and difficult to undo.

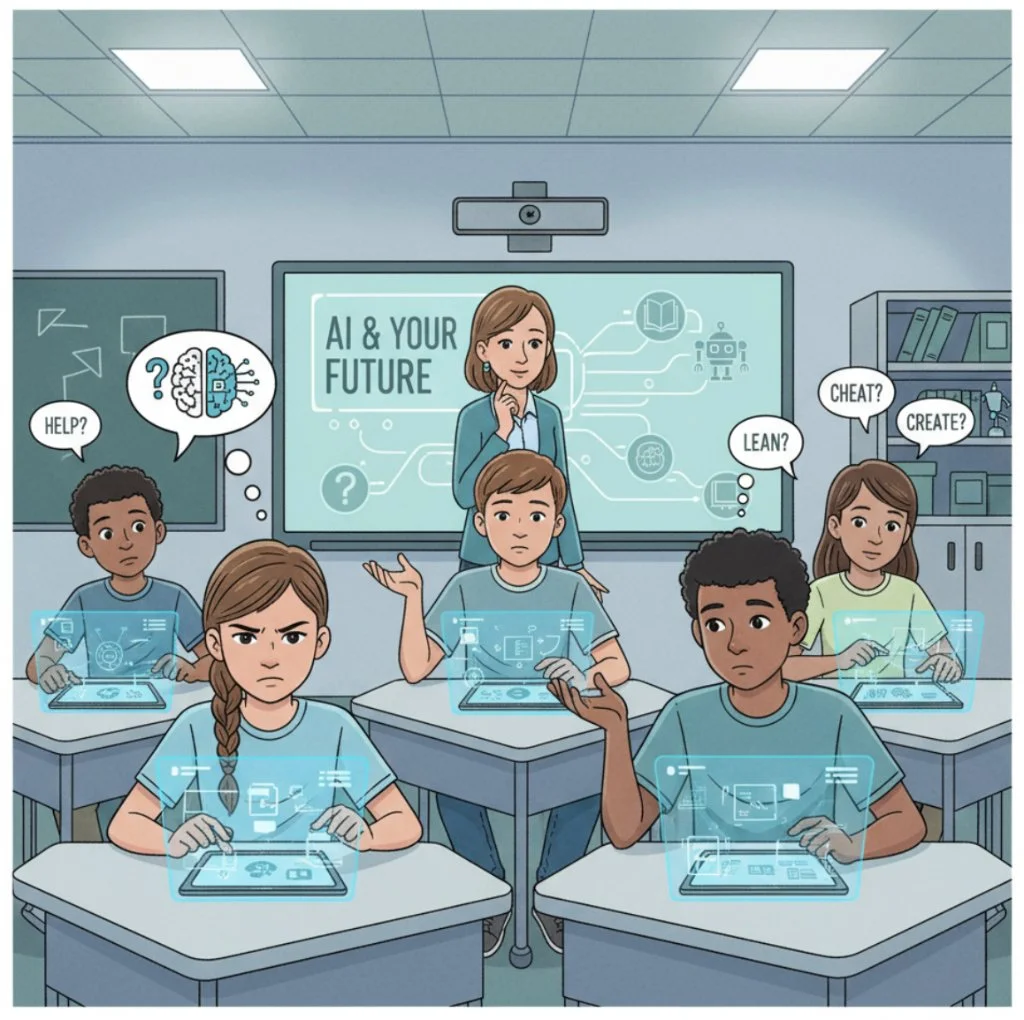

AI arrives carrying similar risks, but also unprecedented potential. For educators, the question is not whether AI will shape students, but what kind of humans they will become as a result.

Short-Term Impacts: Convenience, Confidence, and Cognitive Offloading

In the short term, AI acts like a remarkably helpful teaching assistant. Students can draft faster, summarise complex texts, translate ideas, and receive instant feedback. For many learners, especially those with language barriers or learning differences, this can be empowering and confidence-building. But there is a shadow side. When answers arrive instantly, struggle shortens. When phrasing is optimised by machines, students may begin to distrust their own voice. Early habits matter: if learners associate thinking with prompting rather than grappling, curiosity can quietly atrophy.

At this stage, the goal is guided friction. Teachers can normalise AI as a support tool while still requiring visible thinking in the form of drafts, reflections, oral explanations, and process journals. Skills like questioning, reasoning, and revision must remain explicitly human-led.

Medium-Term Impacts: Shaped Behaviours and Algorithmic Adaptation

Over time, users begin to adapt themselves to the tool. Just as social media rewarded outrage, brevity, or conformity, AI systems may subtly encourage clarity over originality, efficiency over depth, and predictability over risk. Students may start writing for AI: phrasing ideas in ways that feel “machine-legible,” choosing safer answers that align with training data rather than exploring unconventional perspectives. Some may prefer AI interaction to human feedback because it feels less vulnerable, less messy, and less judgmental.

This is where character education becomes inseparable from digital literacy. Learners must be taught:

How AI is trained (and what it cannot know)

How bias, optimisation, and probability shape outputs

How to disagree, refine, and resist suggestions, even when they sound confident

Classrooms can model this by asking: When should we not use AI? and What would a human notice here that a machine might miss?

Long-Term Impacts: Identity, Agency, and Irreversibility

The most profound effects of AI may only become visible decades later. Tools that accompany us daily don’t just influence productivity; they influence identity. If AI becomes the default thinking partner, future adults may struggle with solitude, uncertainty, or moral ambiguity. Creativity could become more curatorial than generative. Decision-making might lean toward optimisation rather than wisdom. At a societal level, AI amplifies a critical feature of social media: it can influence people ultra-personally and systemically at the same time. Personalised persuasion, automated dependency, and invisible nudging raise the stakes not just for learning, but for democracy, agency, and human dignity.

Education must aim beyond competence toward resilience of character. This includes:

Intellectual humility (knowing when not to trust outputs)

Moral reasoning (asking not just “can we?” but “should we?”)

Emotional resilience (tolerating uncertainty and imperfection)

A strong sense of authorship and responsibility

Edufiction as a Bridge: Teaching Through Story

One powerful way to cultivate these traits is through edufiction: stories that embed factual understanding inside human narratives. Imagine students reading about a character who relies on AI for every decision, only to face a moment when the system fails, or a story in which a community must decide whether efficiency is worth the loss of human connection. Stories allow learners to feel the implications of technology, not just analyse them. They create emotional memory, empathy, and ethical imagination, qualities no algorithm can automate.

Setting the Tone Now

We are still in the early days of the AI age. History teaches us that the norms set now, by teachers, schools, families, and communities, will harden into systems later. Social media showed us what happens when education lags behind adoption. For educators, this moment is not about fear or rejection. It is about intentional formation.

AI should help students think better, not less.

It should support learning without replacing struggle.

And it must always serve the development of wise, resilient humans, not the other way around.

If we teach skills alone, students may adapt.

If we teach character alongside skills, they will endure.

And that may be the most important lesson of all.